45,000,000,000+

GEN AI CREATIONS AS OF Q2 2023

Introduction

Welcome back to The “i”, your go-to source for all things generative AI.

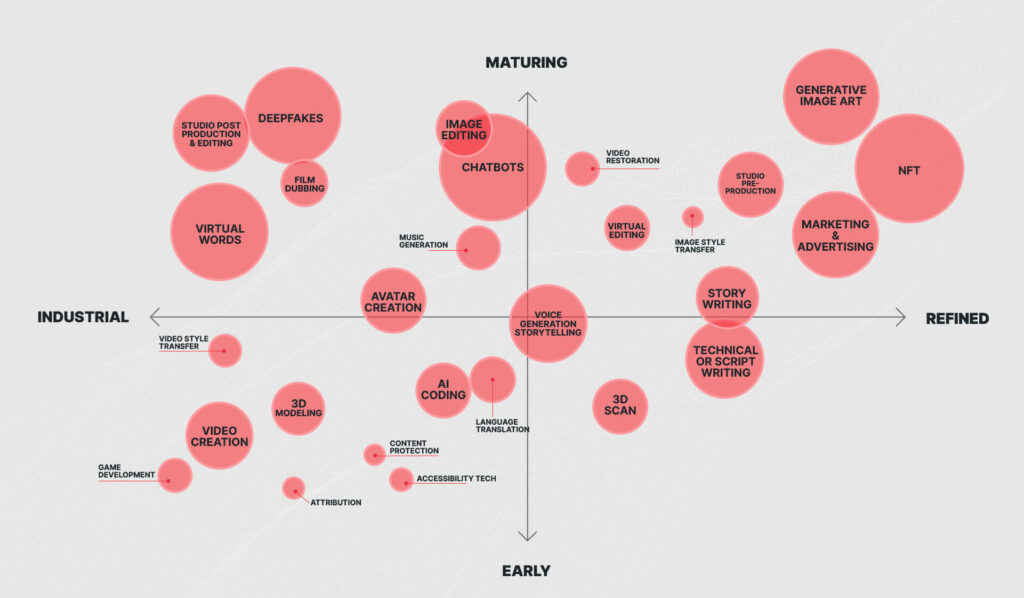

Our latest update includes a refreshed mapping (above) of the progress of different applications of generative AI, as well as our perspective on what’s next for the space; the funding environment; how generative AI is shaping the future of work, particularly in Hollywood; continued concerns around unauthorized deepfakes; an engaging Q&A with generative AI expert Nina Schick on the regulatory landscape; and interesting activations we’re seeing in the field.

In the past three months, we’ve seen an overall shift toward the center across our mapping, with several applications in both the upper and lower halves moving toward the x-axis. This is not to say that the applications that have moved down are not maturing, only that the pace of maturation may have slowed; likewise, for applications that have moved to the right, refinement may be less of a focus in that particular area. Notably, chatbots continued to move up the y-axis.

If there’s one thing that’s clear, it’s that we have truly only reached the tip of the iceberg in terms of generative AI’s power to transform the creator economy.

We begin on that note, with a dispatch from our recent conversations in Hollywood.

Progression to Mainstream

Generative AI: Vertical integration comes to the creator economy

Generative AI is still in its nascent stages, but maturation is taking place. One area where this is especially true? The growing consensus that authenticating the inputs that train generative AI engines is necessary in order for widespread adoption of the technology to occur.

The reckoning on AI regulation is not here yet, but it is coming. And when that tide goes out, to paraphrase Warren Buffett, platforms that have relied on unauthorized training data will be caught swimming naked.

With this in mind, we see the roadmap for products across the generative AI landscape going in the following direction:

Authenticating data from the start

Based on our recent conversations with platforms and IP holders (including actors, major studios, writers, digital creators, music labels, gaming companies, and more), it’s clear that no one wants to work with base data that has not been fully licensed. Starting down, or doubling down on, the unlicensed path now will only result in legal headaches later on. And as regulations are put into place, solutions will be needed to enforce them quickly and accurately. Existing features such as YouTube’s Content ID, which lets copyright holders identify material that uses content they own, simply aren’t built to authenticate AI-generated content or derivatives.

Development of engines for internal and external use

Adopting generative AI models allows IP holders to create their own engines, which can be used by their own teams to speed up and enhance creative processes and, crucially, be opened up for public use to allow fans to engage with beloved IP directly.

Vertical integration comes to the creator economy

Engine-building is a watershed moment for content creators and owners, especially corporate ones. Up until now, IP holders have largely had to rely on third-party platforms to allow their fans to engage with their content digitally. Musicians and music labels, for instance, needed platforms like Spotify or Apple Music to bring their tunes to the digital marketplace. With generative AI, however, content creators and owners can build their own generative AI engines — in essence, owning the entire means of generative AI production and distribution, from the inputs (original content used as training data) to the outputs (derivatives made by the engines). Think of this as a digital equivalent of the Ford River Rouge complex, with hundreds springing up from IP holders around the world in the not-so-distant future.

Growing marketplace for AI-generated goods

As fans get access to external-facing engines, the opportunity — and demand — for the creation of digital and physical goods featuring AI-generated content will skyrocket. Fans will want to make their one-of-one creations into goods that no one else has: everything from NFTs and avatars to t-shirts and stickers — thereby opening up more revenue streams for the owners of the engines and original, authenticated content used to train them.

The bottom line: With generative AI, there’s no need for content owners to engage a middleman in order to open their content up for fan engagement in the digital world. IP holders can engage with the creator economy directly, on generative AI platforms that they own and control, if they invest the time and resources into building their own engines. And as this happens, IP holders will become more and more averse to commingling their IP — that is, allowing their content to be fed into engines that draw upon content from other creators and owners as well, resulting in outputs that are composites of multiple owners’/creators’ styles rather than styles that are uniquely theirs.

To that end, the debate around whether open source or closed technology will dominate the future is a bit misguided. We will see a mix of both, and flip-flops from one to the other. For instance, a company might “open” proprietary technology to allow more people to engage with it, and open source tech might become “closed” for the purposes of monetization.

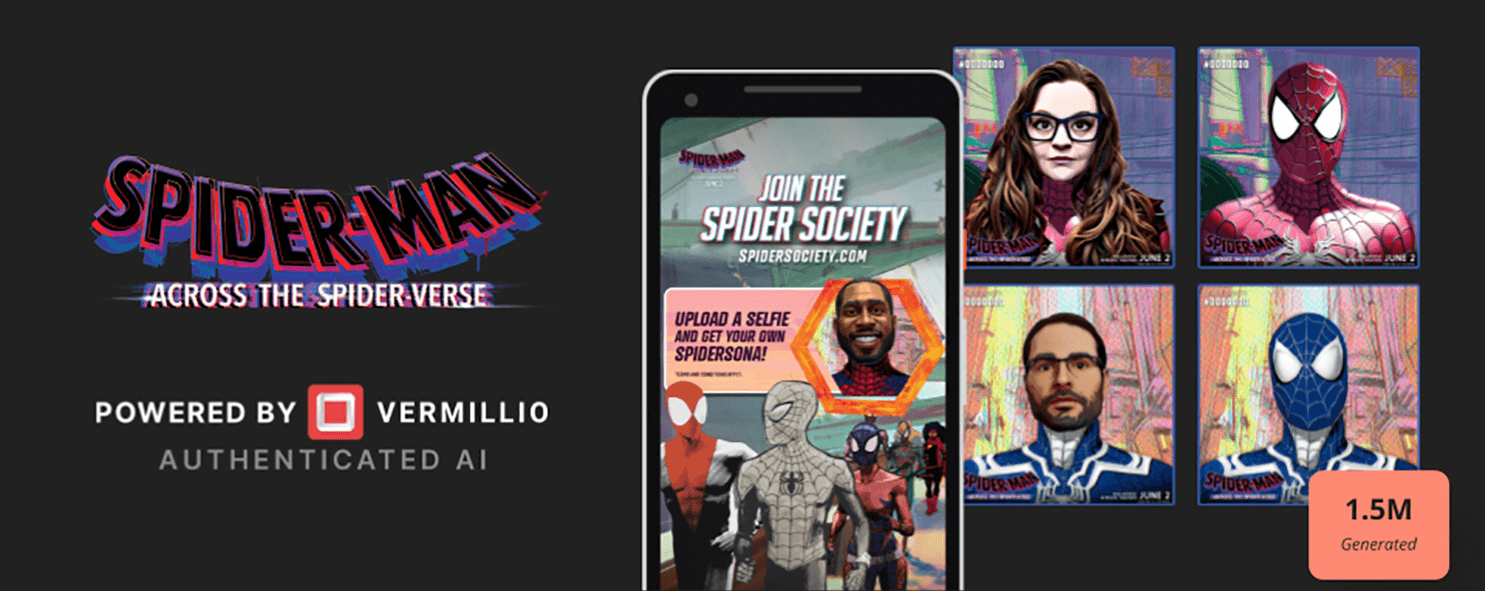

Expect to see more — and farther-reaching — activations like the first-of-its-kind Spider-Verse AI engine our team at Vermillio created in partnership with Sony Pictures Entertainment.

The engine empowers Spider-Man: Across the Spider-Verse fans globally to create their own “Spidersona”, a one-of-a-kind digital version of themselves in the animation style of, and in partnership with, Spider-Verse artists.

The Spidersona engine is trained exclusively on original, authenticated content from the Spider-Verse films, with thousands of film images and franchise-specific details such as color palettes and lighting. Our platform allows users to seamlessly verify and authenticate who has created what content and where the content is coming from, protecting the rights of creators and IP owners. To date, more than 1.5 million Spidersonas have been created.

Money In

Funding and deals

Investments in the generative AI space in Q2 have not slowed down. In fact, according to PitchBook, more than $12 billion worth of deals were announced or completed in Q1 alone. Roughly $1.7 billion was generated across 46 deals in Q1 2023, and an additional $10.68 billion worth of deals were announced in the same quarter but have yet to be completed.

In late June, Amazon’s cloud unit, AWS, announced it would allocate $100 million for a center to help companies use generative AI. Although a small investment for AWS, the announcement demonstrates that AWS recognizes the significance of the current moment in generative AI and doesn’t want to be left behind by rivals Microsoft and Google.

Even more impressive was Databricks’ recent announcement that it is acquiring AI startup MosaicML in a mostly-stock deal valued at $1.3 billion. Databricks is focused on bringing down the cost of using generative AI, from tens of millions to hundreds of thousands of dollars per model, meaning that smaller companies can offer similar AI models, customized with a company’s own data, at a lower cost.

Shares of video game chip maker Nvidia have tripled this year thanks to the AI boom, briefly giving the company a trillion-dollar valuation. Nvidia’s GPUs are well suited to crunching the huge amounts of data needed to train cutting-edge generative AI programs.

As investments in the generative AI space continue at a rapid pace and these tools become more commonplace in our society, there are strong global implications for wealth and efficiency.

According to Goldman Sachs, these tools could drive a 7% (almost $7 trillion) increase in global GDP and lift productivity growth by 1.5 percentage points over a 10-year period.

Future of Work

Workplace integrations and consequences for workforce

When it comes to the Future of Work and generative AI, the conversation is still centered around AI replacing jobs, whereas it should be centered around enhancing creativity. In the visual arts, artists are already creating generative versions of their artwork, and the value of the work they drew or painted themselves is rising sharply. The reason is that the human touch is becoming more and more valuable.

In Hollywood, although there are other issues at play in the writers’ and actors’ strikes, the guilds’ actions show that generative AI is already starting to shape labor discussions and movements. These are the first large-scale attempts by labor unions to pressure an industry to regulate and, in some cases, restrict applications of AI that could be used to replace workers.

Elsewhere in Hollywood, the Directors Guild and top studios and streamers struck a tentative deal on a new three-year labor contract. The agreement confirmed that AI is not a person and that generative AI cannot replace the duties performed by DGA members, and we could not agree more. We’re pleased with this significant milestone, which will only take creativity to the next level.

We adamantly believe that generative AI at its best will enhance creativity and productivity.

In fact, we believe that most creators — including writers — will eventually want their own personalized engines to use as a tool to brainstorm, test ideas, prompt new thinking, and more for infinite inspiration. Think about having your own machine to assist you in the creative process, providing inspiration from a number of different angles. Personalized engines and the hypercharged creativity that comes with them are the logical next step.

However — crucially — we believe this can only be done if generative AI is Authenticated AI.

Without it, there’s real concern that creators’ work will get ripped off by companies using their content to train their engines. If everyone gets around the table with Authenticated AI, creatives will be fairly credited and compensated for their work and the opportunities for Hollywood and beyond are truly endless.

More Fake

Concerns for deepfakes

Season 6 of Black Mirror opens with a parable about the dangers of AI: an average woman is horrified when she learns that a global streaming platform has launched a prestige TV drama adaptation of her life. The episode is fairly realistic in its depiction of AI’s capabilities. The woman’s data has been ingested by a personalized engine that generates video content, nearly in real time. If such functionalities aren’t possible yet, they may be soon.

Black Mirror is tapping into fears about how AI will interact with our data. Sucked into large models and spit back out in new forms, our 0s and 1s can be transformed into new shapes that belie their origins. In “deepfakes”, we can be made to appear to do things we never would in real life.

Deepfakes have already permeated our political life (consider the worries that a flood of AI-generated fake videos will spread misinformation in the 2024 election), and new concerns about identity theft are cropping up, too.

The EU AI Act’s framework attempts some protective guidance, requiring developers to publish summaries of copyrighted data used for training purposes, so that creators can be remunerated for the use of their work. But the act may go too far, unnecessarily curtailing innovation by placing disproportionate compliance costs and liability risks on developers.

However, that’s not to say there’s no upside to this technology. As celebrities have less time, generative AI and deepfakes can help them continue to connect to their fans. Think about birthday messages from your favorite celeb, but instead of them having to record it themselves, AI technology does it for them.

As outlined above, tech may solve many of the same problems without sacrificing a strong innovation environment. The ability to license training data will protect individual users and creators, propelling AI forward into a new era of safe, personalized use.

Regulation

Or Lack Thereof

Though no country seems to have cracked the code yet on how best to regulate generative AI, there have been some interesting developments in the past quarter in the regulatory landscape, particularly in the UK and Europe. To shed light on this, Vermillio’s co-founder and CEO Dan Neely sat down with UK-based generative AI expert Nina Schick.

The EU’s AI Act requires providers and users of AI to comply with new rules on data and data governance. How do you see that playing out, concretely?

I think this is ultimately the million-dollar question when it comes to EU regulation. There’s no doubt that the EU is leading on AI regulation — the EU’s AI Act is the first transnational piece of regulation focused on artificial intelligence. Although it has been in the works for numerous years, there was no mention of generative AI until ChatGPT came out in November of last year. Now, policymakers understand that this is going to be a huge deal, so they have gone back and are busy redrafting it.

As the current draft stands, it will be up to the companies and the builders of foundational models — anyone using generative AI models — to show that they have identified and mitigated risks in a whole host of categories. These categories of risks include rule of law, democracy, and the environment. When this actually comes into force in 2026, the compliance will be extremely difficult for SMEs and startups. I think the best way I’ve heard this described is that this would be like GDPR on steroids.

The fear around the EU’s AI Act is that it is actually going to stifle innovation. In part, we are already seeing that in Europe: you don’t see huge tech success stories coming out of that region.

There are some gaps and omissions in the new act, namely around requirements to inform people who are subjected to algorithmic assessments. Do you think tech has a role to play in offering solutions where legislation cannot?

Absolutely! It is really interesting to see how tech, enterprises, and private companies are involved in setting the pace of this societal change, and how private enterprises have been deeply focused on how to regulate the harms and potential misuses of generative AI in our society. I suppose this is because when you consider the last 30 years and the dawn of the internet, we had a certain kind of naivete. We didn’t understand how radically everything was going to be transformed by technology, whether it was our economy, society, or the individual experience. Thirty years on, that kind of naivete just doesn’t exist.

Now, there’s a deep understanding that generative AI is going to have a huge impact and it’s incumbent on the industry to also ensure these technologies are being rolled out ethically, safely, and responsibly. In part that has to do with the fact that the acceleration of the technology is literally exponential. The scale and pace of the technology — along with a lack of regulators with AI expertise — means that rulemaking is a tricky challenge. There’s space and a need for industry leaders to drive the conversation.

You and I have spoken a lot about the need for authenticated AI — that is, for some way of verifying AI-generated images and their derivatives so that work can be properly attributed and owned — and so that everyone can be fairly credited and compensated for what they create. Do you think the AI Act will drive AI in that direction?

Definitely. That’s one thing that’s really exciting about the EU’s AI Act and the broader conversations that are happening at the level of the nation-state about responsible and ethical uses of AI. The belief that there should be transparency at the core of synthetic generations is really gathering speed. Vermillio’s work is the perfect example of the role industry is playing — the work you are doing [around Authenticated AI] is something that regulators and policymakers are catching up to. They are starting to see value in ensuring that this becomes the operational mode in which you can create AI-generated content.

The current draft of the EU’s AI Act stipulates that there should be transparency around where the content came from, but there’s another step to go: authenticated AI, which provides ownership and fair business models for creators. They haven’t caught up totally, but they are already grasping at the idea of transparency and lineage.

Let’s move now to the UK. Last week, OpenAI announced a plan to open its first foreign office in London. Why do you think Altman and team chose the UK — even knowing they’ll have to contend with post-Brexit talent hurdles?

Generally speaking, the UK tends to punch above its weight when it comes to AI. In part that has to do with the research community here: the UK’s excellent universities, such as Oxford, Cambridge, and Imperial, have traditionally had great talent pools.

Some of the really cool startups in the Gen AI space are also based in London. There is not as much investment and funding as in the US but you have a good access point to the European ecosystem.

I’m sure that the UK pitched itself as being the low-regulation or smart regulation innovation hub centered just outside of the European Union. Sunak and the rest of the British government are positioning themselves as a stepping stone to the rest of the world — the bridge between the US and China, an entry point to Europe, and a place where startups and tech companies will be well received.

That being said, the biggest hurdle for the UK is the talent question. Immigration has become such a huge problem in the UK; it was one of the main drivers of the Brexit vote. Since Brexit, immigration from the EU has come down. This is problematic for tech companies because now the UK doesn’t have free access to European talent and it’s really difficult to get visas.

You’ve written about how the UK punches above its weight when it comes to AI research and start-ups. What are the conditions on the ground there enabling that outsized innovation?

There are six aspects of the UK that are enabling outsized innovation. These include:

- A pro-innovation & pro-entrepreneurship cultural mindset,

- Strong research institutions,

- Some of the best talent in the tech sector,

- Good investment opportunities,

- A government that has recognized the importance of this sector, and

- An existing presence of some of the monoliths and unicorns in the space.

I’ve also been hearing more about the so-called “AI arms race” between China and the US. Over-hyped or real?

I think it’s definitely an arms race, especially when you consider the direct applications in military and national security. Whoever has the AI dominance is undoubtedly going to be the geopolitical leader in the world because of all the potential economic abundance associated with that position.

That being said, though China is certainly taking generative AI seriously, when you look at what’s been happening in the last few years in generative AI, all the innovation has come from the West. It hasn’t come from China, which is really interesting. And, if you look at the flourishing research and open source community it is centered around the US, Europe, and Eastern Europe.

If you look at the sheer amount of innovation and money that is being poured into AI in the US vs. China, the US is miles ahead. It’s already something like $30 billion of private investment in AI through June of this year, whereas in China that figure was $4 billion. And if you look at the rest of the world, the US is still the biggest chunk.

We’re starting to see the geopolitical implications of the latest phase of tech competition between the US and China play out in the semiconductor business. Nvidia created their A800 chip to get around the embargo on the export of US chips to China, but it looks like a tranche of regulations is coming into place where not only will the A800 no longer be exported to China but they’re also going to shut down Chinese companies’ abilities to access compute through cloud. Of course, that will have an impact on US companies that are providing services either for cloud or the actual hardware in China.

And China has a different philosophy on how these technologies might be used. A recent piece from Andreessen Horowitz explores that difference of vision. China is interested in using this tech for control of citizens vs. freedom of citizens, which will come to bear on the regulatory front as well.

Yes… [With generative AI] a state would have the ultimate tools on hand to build the techno-authoritarian state.

How might the geopolitical competition shape the development of AI? (i.e., will we see a near-term focus on chip production at the expense of personalized engines?)

I think one of the really interesting, decisive factors is going to be how different public opinion is.

According to global polls on AI, 80% of Chinese civilians believe AI is a good thing, whereas American civilians are wary of AI. I’m sure that this sentiment is only going to increase once the automation of layers of white-collar work starts to happen. Gen AI is not just about content; it’s also about efficiency and instruction, and the way that is going to be rolled out when it comes to knowledge work is going to be potentially seismic.

Goldman Sachs predicted 300 million jobs would be automated or lost, which doesn’t account for how many new jobs will be created. But the headline fact is there is going to be huge disruption when it comes to white collar jobs and knowledge work.

If that becomes a hot political topic, as I suspect it might, you might really start to see public sentiment crystallize around AI being a negative force.

This issue is going to play out on lots of different levels. I think in the geopolitical race to get ahead there’s no doubt that China wants to be able to develop and build its own hardware. They want to be able to build their own chips, but the question is will they be able to do so.

On the other level, which is just creating companies and experiences that consumers and people want, is that going to go full steam ahead? I think so. I don’t think you can put innovation back in the bottle once it is out.

What’s Next

The future of content and fandom

We are already seeing innovative activations that allow fans to engage with content that has been made available by owners and creators. Here are some of the most interesting activations so far in the Authenticated AI space:

In early April, visual artist Chad Nelson created a five-minute animated film, titled “Critterz”, using images generated exclusively by the AI tool DALL-E.

One activation we didn’t include in our Q1 “i” update but is too big not to highlight this time: In February of this year, David Guetta used generative AI to add the “voice” of Eminem to one of his songs — proving to the industry just how well the technology works. Although the record was intended as a prank, it serves as yet another reminder of the need for authenticated AI, across all forms of creative media: text, audio, image, and video.

Move.ai enables creators to capture their own movement and convert that content into motion in the digital world. They recently launched the Invisible solution in partnership with another company called disguise. Invisible makes it faster, easier, and more cost-effective to have live motion capture in broadcast, immersive installations, live events, and XR film.

Pixelynx is building a leading music metaverse ecosystem, meaning they are developing an ecosystem of products that will help artists and fans express their ideas and showcase their fandom in new ways. Their project, Korus, gives users access to an AI musical companion that gives creators the power to play, create, and remix music.

We recently partnered with Sony Pictures Entertainment to launch a first-of-its-kind generative AI engine trained on original, authenticated content from the Spider-Verse films — marking the first time a major studio is using generative AI at this scale.

Make your own Spidersona here if you haven’t already!

Vermillio is the first and only generative AI platform built to empower creators and protect their work.

Using our platform, artists, studios, and intellectual property owners can track and authenticate generative AI as well as the use of their work by others in order to receive fair credit and compensation.

Exercising control over their IP even as it is transformed by AI, creators large and small can engage directly with their fans, quickly and easily bringing new and immersive experiences to life in the style of the world’s most beloved IP.
